Install Hadoop on Windows Without Cygwin

Build and Install Hadoop 2.x or newer on Windows

1. Introduction

Hadoop version 2.2 onwards includes native support for Windows.

Jan 6, 2018. Expect the build process to run a long list of commands for some time.

The official Apache Hadoop releases do not include Windows binaries (yet, as of January 2014). However, building a Windows package from the sources is fairly straightforward. Hadoop is a complex system with many components.

Some high-level familiarity with it is helpful before attempting to build or install it for the first time. Familiarity with Java is necessary in case you need to troubleshoot.

2. Building Hadoop Core for Windows

2.1. Choose target OS version

The Hadoop developers have used Windows Server 2008 and Windows Server 2008 R2 during development and testing. Windows Vista and Windows 7 are also likely to work because of the Win32 API similarities with the respective server SKUs. We have not tested on Windows XP or any earlier versions of Windows, and these are not likely to work.

Any issues reported on Windows XP or earlier will be closed as Invalid. Do not attempt to run the installation from within Cygwin; Cygwin is neither required nor supported.

Choose Java Version and set JAVA_HOME

Oracle JDK versions 1.7 and 1.6 have been tested by the Hadoop developers and are known to work. Make sure that JAVA_HOME is set in your environment and does not contain any spaces.
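Before starting a long build, it can help to confirm the JAVA_HOME requirement with a small script. The Python sketch below is illustrative only: the function name java_home_problems is hypothetical and not part of Hadoop. It reports a missing JAVA_HOME, a value containing spaces, or a value that does not point to an existing directory.

```python
import os


def java_home_problems(java_home):
    """Return a list of problems with a JAVA_HOME value; an empty list means none were found."""
    if not java_home:
        return ["JAVA_HOME is not set"]
    problems = []
    if " " in java_home:
        # The build instructions require JAVA_HOME without spaces; an 8.3 short
        # name such as c:\Progra~1\Java avoids the problem.
        problems.append("JAVA_HOME contains spaces: " + java_home)
    if not os.path.isdir(java_home):
        problems.append("JAVA_HOME is not an existing directory: " + java_home)
    return problems


if __name__ == "__main__":
    found = java_home_problems(os.environ.get("JAVA_HOME", ""))
    for problem in found:
        print(problem)
    if not found:
        print("JAVA_HOME looks usable")
```

Run it from the same command prompt you will build from, so it sees the same environment variables as the build.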

If your default Java installation directory has spaces, then you must use the Windows 8.3 short path name instead, e.g. c:\Progra~1\Java instead of c:\Program Files\Java.

Getting Hadoop sources

The current stable release as of August 2014 is 2.5. The source distribution can be retrieved from the ASF download server or by using Subversion or git.

• From the ASF download server or a mirror.
• Subversion URL:
• Git repository URL: git://git.apache.org/hadoop-common.git

After downloading the sources via git, switch to the stable 2.5 branch using git checkout branch-2.5, or use the appropriate branch name if you are targeting a newer version.

Installing Dependencies and Setting up Environment for Building

The BUILDING.txt file in the root of the source tree has detailed information on the list of requirements and how to install them. It also includes information on setting up the environment and a few quirks that are specific to Windows.

It is strongly recommended that you read and understand it before proceeding.

A few words on Native IO support

Hadoop on Linux includes optional Native IO support. However, Native IO is mandatory on Windows, and without it you will not be able to get your installation working. You must follow all the instructions from BUILDING.txt to ensure that Native IO support is built correctly.

Build and Copy the Package files

To build a binary distribution, run the following command from the root of the source tree:

    mvn package -Pdist,native-win -DskipTests -Dtar

Note that this command must be run from a Windows SDK command prompt, as documented in BUILDING.txt.


A successful build generates a binary Hadoop .tar.gz package in hadoop-dist\target\. The Hadoop version is part of the package file name.
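Because the version is part of the file name, a script that picks up the package should match a pattern rather than a fixed name. The Python sketch below is a minimal illustration using only the standard library. The helper names find_hadoop_package and extract_package are hypothetical, and the hadoop-<version>.tar.gz naming pattern is an assumption based on the example file name in this guide. The sketch locates the package and can also unpack it for the installation step, so no separate tar tool is needed on Windows.

```python
import glob
import os
import re
import tarfile

# Assumed naming: hadoop-<version>.tar.gz, e.g. hadoop-2.5.0.tar.gz or
# hadoop-2.6.0-SNAPSHOT.tar.gz. Adjust the pattern if your build differs.
PACKAGE_PATTERN = re.compile(r"^hadoop-(\d+(?:\.\d+)*(?:-[A-Za-z0-9]+)?)\.tar\.gz$")


def find_hadoop_package(target_dir):
    """Return (path, version) of the single hadoop-*.tar.gz in target_dir."""
    found = []
    for path in glob.glob(os.path.join(target_dir, "hadoop-*.tar.gz")):
        match = PACKAGE_PATTERN.match(os.path.basename(path))
        if match:
            found.append((path, match.group(1)))
    if len(found) != 1:
        raise FileNotFoundError(
            "expected one hadoop package in %s, found %d" % (target_dir, len(found))
        )
    return found[0]


def extract_package(package_path, install_dir):
    """Unpack a .tar.gz with Python's tarfile module, so no tar.exe is needed."""
    os.makedirs(install_dir, exist_ok=True)
    with tarfile.open(package_path, "r:gz") as archive:
        if hasattr(tarfile, "data_filter"):
            # Newer Python releases support extraction filters that reject unsafe paths.
            archive.extractall(install_dir, filter="data")
        else:
            archive.extractall(install_dir)
```

For the example layout in this guide, you might call find_hadoop_package(r"hadoop-dist\target") and then extract_package(path, r"c:\deploy"). Depending on how the archive is laid out, its contents may land in a top-level hadoop-<version> folder rather than directly under c:\deploy. Compare the result with the directory listing in the Installation step and adjust later paths accordingly.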

If you are targeting a different version, the package name will be different.

Installation

Pick a target directory for installing the package. We use c:\deploy as an example. Extract the tar.gz file (e.g. hadoop-2.5.0.tar.gz) under c:\deploy. If you are installing a multi-node cluster, repeat this step on every node. The result is a directory structure like the following:

C: deploy>dir Volume in drive C has no label. Volume Serial Number is 9D1F-7BAC Directory of C: deploy 08:11 AM.

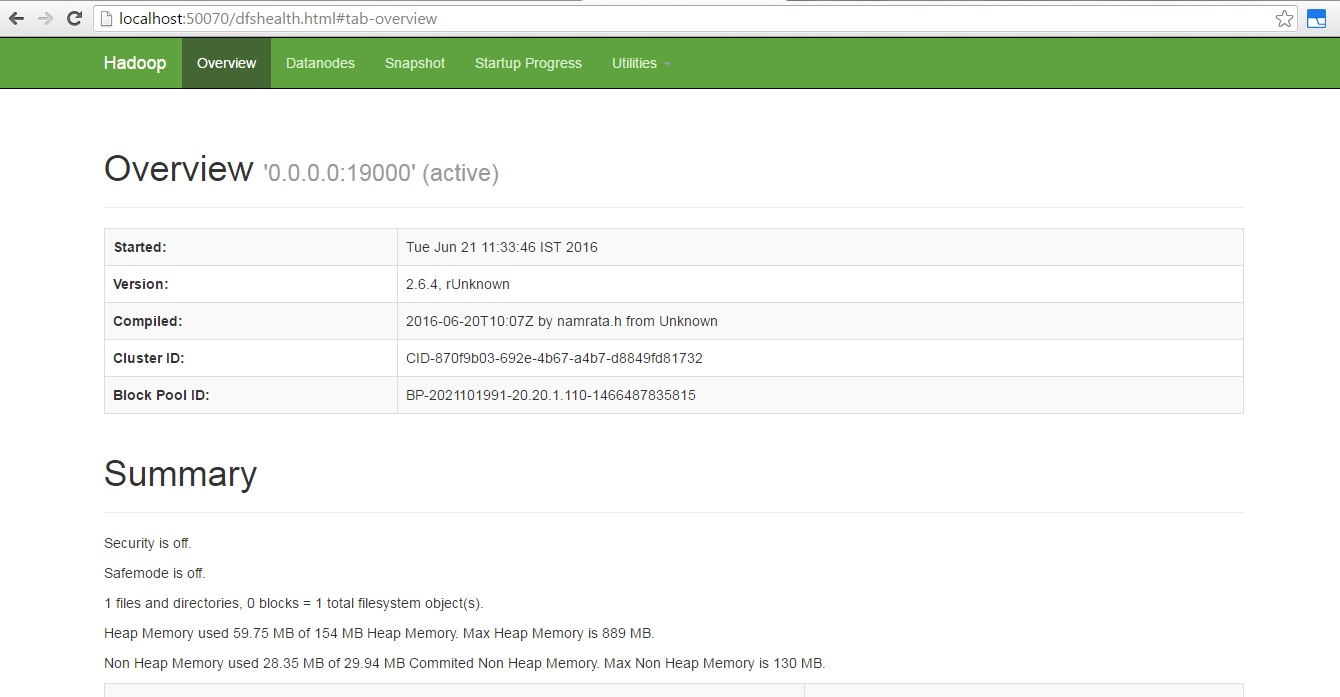

08:28 AM bin 08:28 AM etc 08:28 AM include 08:28 AM libexec 08:28 AM sbin 08:28 AM share 0 File(s) 0 bytes 3. Starting a Single Node (pseudo-distributed) Cluster This section describes the absolute minimum configuration required to start a Single Node (pseudo-distributed) cluster and also run an example job. Example HDFS Configuration Before you can start the Hadoop Daemons you will need to make a few edits to configuration files. The configuration file templates will all be found in c: deploy etc hadoop, assuming your installation directory is c: deploy.

First, edit the file hadoop-env.cmd to add the following lines near the end of the file:

    set HADOOP_PREFIX=c:\deploy
    set HADOOP_CONF_DIR=%HADOOP_PREFIX%\etc\hadoop
    set YARN_CONF_DIR=%HADOOP_CONF_DIR%
    set PATH=%PATH%;%HADOOP_PREFIX%\bin

Edit or create the file core-site.xml and make sure it has the following configuration key:

    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://0.0.0.0:19000</value>
      </property>
    </configuration>

Edit or create the file hdfs-site.xml and add the following configuration key:

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
    </configuration>

Finally, edit or create the file slaves and make sure it has the following entry:

    localhost

The default configuration puts the HDFS metadata and data files under \tmp on the current drive. In the above example, this would be c:\tmp. For your first test setup, you can just leave it at the default.
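If you prefer to script these edits, for example when repeating them on several nodes, the Python sketch below creates or updates core-site.xml and hdfs-site.xml and makes sure slaves lists localhost. It is an illustration under stated assumptions. The function names are hypothetical, it uses only the standard library's xml.etree.ElementTree, and rewriting a file this way drops any XML comments (such as the license header) from the shipped templates. It does not modify hadoop-env.cmd.

```python
import os
import xml.etree.ElementTree as ET


def set_site_property(path, name, value):
    """Create or update one <property> in a Hadoop-style *-site.xml file."""
    if os.path.exists(path):
        tree = ET.parse(path)  # note: XML comments are not preserved
        root = tree.getroot()
    else:
        root = ET.Element("configuration")
        tree = ET.ElementTree(root)
    for prop in root.findall("property"):
        if (prop.findtext("name") or "").strip() == name:
            value_el = prop.find("value")
            if value_el is None:
                value_el = ET.SubElement(prop, "value")
            value_el.text = str(value)
            break
    else:
        # No existing entry for this key: append a new <property>.
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    tree.write(path, encoding="utf-8", xml_declaration=True)


def ensure_host_listed(path, host="localhost"):
    """Make sure host appears on its own line in a slaves-style file."""
    hosts = []
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            hosts = [line.strip() for line in f if line.strip()]
    if host not in hosts:
        hosts.append(host)
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(hosts) + "\n")


def apply_minimal_config(conf_dir):
    """Apply the single-node settings described above to conf_dir."""
    os.makedirs(conf_dir, exist_ok=True)
    set_site_property(os.path.join(conf_dir, "core-site.xml"),
                      "fs.default.name", "hdfs://0.0.0.0:19000")
    set_site_property(os.path.join(conf_dir, "hdfs-site.xml"),
                      "dfs.replication", 1)
    ensure_host_listed(os.path.join(conf_dir, "slaves"), "localhost")
```

For the example installation, apply_minimal_config(r"c:\deploy\etc\hadoop") applies the settings above. Running it twice is harmless because existing keys are updated rather than duplicated. In Hadoop 2.x, fs.default.name is a deprecated alias for fs.defaultFS and is still accepted.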